
Why am I even writing this article? Isn’t working with blobs using the AWS S3 SDK quite straightforward?

Well, the truth is both yes and no.

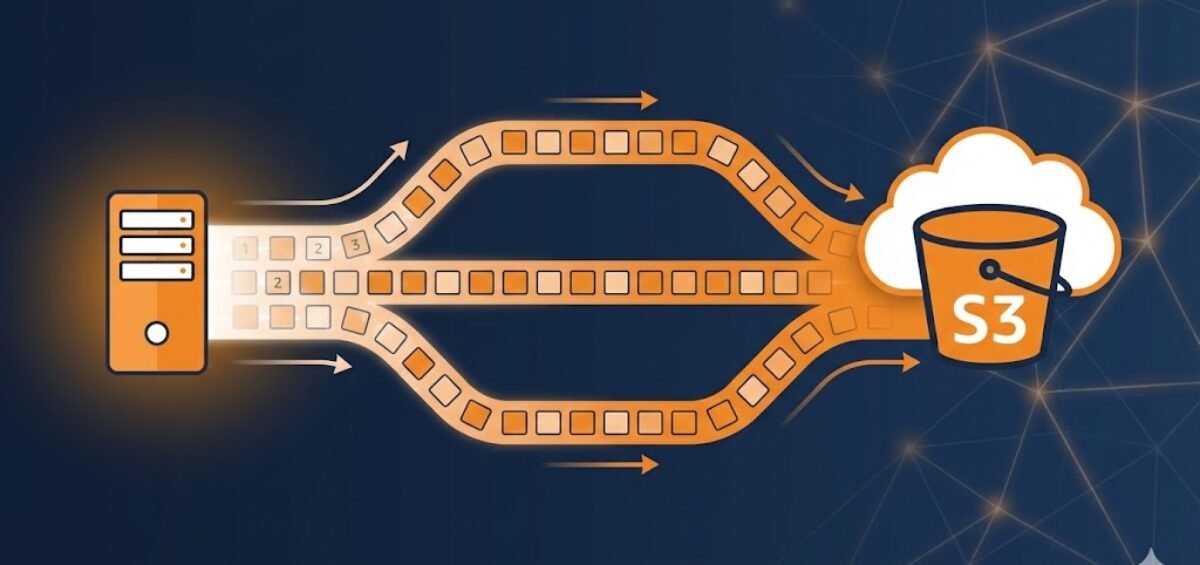

Using the SDK to upload files is simple enough—until you actually want to stream data into S3. And by streaming, I mean genuinely sending chunks of data as they arrive, rather than loading the entire thing into memory first.

But you might ask: isn’t the SDK already doing this for us when we use a Stream? The answer is no. While Stream is designed for streaming—and it’s what it does best—the SDK hides a rather annoying behaviour beneath the surface.

I only stumbled across this when I was trying to upload a stream that was read-only, non-seekable, and had an unknown content length: essentially, HttpContext.Request.Body. The reason I needed to do this is beyond the scope of this article, but the key point is that this stream is not seekable—and any attempts to make it so are simply not feasible.

So what is really happening with the SDK's upload requests? Here are two basic ways to upload a stream: a PutObjectRequest and the UploadObjectFromStreamAsync convenience method.

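```csharp
// Option 1: build a PutObjectRequest and send it
var request = new PutObjectRequest
{
    BucketName = _configuration.BucketName,
    Key = storeId.ToString(),
    InputStream = contentModel.Content, // read-only, non-seekable, unknown length
    Metadata =
    {
        ["x-amz-meta-virtual-name"] = virtualName
    },
    ServerSideEncryptionMethod = ServerSideEncryptionMethod.None
};

await _context.Client.PutObjectAsync(request);

// Option 2: the convenience method, which takes the stream directly
await _context.Client.UploadObjectFromStreamAsync(
    _configuration.BucketName,
    storeId.ToString(),
    contentModel.Content,
    new Dictionary<string, object>());
```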

Why the AWS S3 SDK Stream Upload Fails

The upload fails because the SDK tries to read the stream's Length property. This surprised me, because I thought it was streaming, meaning the length might not yet be known to our service. So why is the SDK behaving like this? I certainly didn't experience such behaviour with MongoDB's GridFS, so I was convinced I was on the right path.

Then I started thinking: should I just copy everything into a MemoryStream? Of course the answer is no; it's simply wrong. For small files it may be an option, but for large files we certainly don't want the entire content sitting in memory. And why would we?

After digging, I found similar issues raised with AWS. While they claimed to have solved it, that's not the case: at the time of writing, I'm using AWSSDK.S3 version 4.0.17.2, and the upload above still fails.
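To make the Length problem concrete without involving S3, here is a minimal sketch that uses a GZipStream as a stand-in for HttpContext.Request.Body (an assumption for illustration: both are read-only, forward-only streams whose total length is unknown). Non-seekable streams like these typically report CanSeek as false and throw NotSupportedException from Length, so any code path that needs the total size up front fails.

```csharp
using System;
using System.IO;
using System.IO.Compression;

// Build a small gzip payload in memory so there is something to decompress.
var data = new byte[1024];
var compressed = new MemoryStream();
using (var gzip = new GZipStream(compressed, CompressionMode.Compress, leaveOpen: true))
{
    gzip.Write(data, 0, data.Length);
}
compressed.Position = 0;

// Stand-in for HttpContext.Request.Body: read-only, forward-only, unknown length.
using var body = new GZipStream(compressed, CompressionMode.Decompress);

bool canSeek = body.CanSeek;

// Any code that needs the total size up front ends up here.
bool lengthThrows = false;
try
{
    _ = body.Length;
}
catch (NotSupportedException)
{
    lengthThrows = true;
}

Console.WriteLine($"CanSeek: {canSeek}, Length throws: {lengthThrows}"); // CanSeek: False, Length throws: True
```

A MemoryStream reports both properties without complaint, which is why buffering everything makes the error go away, at the cost of holding the whole body in memory.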


Solution: Working with Non-Seekable Streams in AWS SDK – S3 Multipart Upload in C#

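After trying multiple suggestions and hacks (one involved using the HTTP client directly to stream chunks into S3), I found a cleaner solution: an S3 multipart upload. Instead of a single request whose total size has to be known up front, the object is sent as a series of parts. Each part is read from the stream into a fixed-size buffer, so the SDK only ever deals with a small, seekable chunk of known length.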

Step 1: Initiate a multipart upload with an InitiateMultipartUploadRequest

```csharp
var initiateRequest = new InitiateMultipartUploadRequest
{
    BucketName = _configuration.BucketName,
    Key = storeId.ToString(),
    Metadata =
    {
        ["x-amz-meta-original-file-name"] = contentModel.FileName,
        ["x-amz-meta-reference-id"] = contentModel.ReferenceId,
    }
};

var initResponse = await _client.InitiateMultipartUploadAsync(initiateRequest);

// Every subsequent part upload, completion or abort refers to this ID
string uploadId = initResponse.UploadId;
```

Step 2: Read your stream in chunks, upload each chunk as a part, then complete the upload

```csharp
const int partSize = 5 * 1024 * 1024; // 5 MB: S3's minimum size for every part except the last

var parts = new List<UploadPartResponse>();
int partNumber = 1;
byte[] buffer = new byte[partSize];
int bytesRead;

// ReadAtLeastAsync (.NET 7+) keeps reading until the buffer is full or the stream ends.
// A plain ReadAsync may return fewer bytes on a network stream, which would produce
// non-final parts under 5 MB and make CompleteMultipartUpload fail.
while ((bytesRead = await contentModel.Content.ReadAtLeastAsync(
           buffer, buffer.Length, throwOnEndOfStream: false)) > 0)
{
    // Wrap only the bytes read; reusing the buffer is safe because each part is awaited
    using var ms = new MemoryStream(buffer, 0, bytesRead);

    var uploadPartRequest = new UploadPartRequest
    {
        BucketName = _configuration.BucketName,
        Key = storeId.ToString(),
        UploadId = uploadId,
        PartNumber = partNumber,
        InputStream = ms,
        IsLastPart = false // per the SDK docs, only needs setting when using the S3 encryption client
    };

    var uploadPartResponse = await _client.UploadPartAsync(uploadPartRequest);
    parts.Add(uploadPartResponse);
    partNumber++;
}

// Tell S3 which parts make up the object (Select/ToList need System.Linq)
var completeRequest = new CompleteMultipartUploadRequest
{
    BucketName = _configuration.BucketName,
    Key = storeId.ToString(),
    UploadId = uploadId,
    PartETags = parts.Select(x => new PartETag()
    {
        ETag = x.ETag,
        ChecksumCRC32 = x.ChecksumCRC32,
        ChecksumCRC32C = x.ChecksumCRC32C,
        ChecksumCRC64NVME = x.ChecksumCRC64NVME,
        ChecksumSHA1 = x.ChecksumSHA1,
        ChecksumSHA256 = x.ChecksumSHA256,
        PartNumber = x.PartNumber
    }).ToList()
};

await _client.CompleteMultipartUploadAsync(completeRequest);
```
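One detail worth checking in Step 2: Stream.ReadAsync may return fewer bytes than requested before the stream has ended, which would produce non-final parts smaller than S3's 5 MB minimum. The sketch below is a hypothetical helper, ReadPartSizesAsync, that fills each buffer completely with Stream.ReadAtLeastAsync (assuming .NET 7 or later) and records the size of each chunk that would become a part. It runs against a GZipStream as a stand-in for the request body and uses 100,000-byte parts instead of 5 MB to keep the demo small.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.IO.Compression;
using System.Threading.Tasks;

// Hypothetical helper: reads a non-seekable stream into fixed-size chunks and returns
// the size of each chunk. Every chunk except the last is exactly partSize bytes,
// which is what S3 requires of non-final parts.
async Task<List<int>> ReadPartSizesAsync(Stream source, int partSize)
{
    var sizes = new List<int>();
    var buffer = new byte[partSize];

    while (true)
    {
        // Keeps reading until the buffer is full or the stream ends (.NET 7+).
        int bytesRead = await source.ReadAtLeastAsync(buffer, buffer.Length, throwOnEndOfStream: false);
        if (bytesRead == 0)
        {
            break; // nothing left: empty stream, or it ended exactly on a chunk boundary
        }

        sizes.Add(bytesRead); // in the real upload: wrap buffer[0..bytesRead] and call UploadPartAsync

        if (bytesRead < buffer.Length)
        {
            break; // a short result only happens at the end of the stream
        }
    }

    return sizes;
}

// Demo data: 250,000 random bytes behind a GZipStream, which (like the request body)
// is non-seekable, has no Length, and may return fewer bytes per read than requested.
var data = new byte[250_000];
new Random(42).NextBytes(data);

var compressed = new MemoryStream();
using (var gzip = new GZipStream(compressed, CompressionMode.Compress, leaveOpen: true))
{
    gzip.Write(data, 0, data.Length);
}
compressed.Position = 0;

using var body = new GZipStream(compressed, CompressionMode.Decompress);

List<int> partSizes = await ReadPartSizesAsync(body, 100_000);
Console.WriteLine(string.Join(", ", partSizes)); // 100000, 100000, 50000
```

In the real upload, each chunk would be wrapped in a MemoryStream and passed to UploadPartAsync, as in Step 2, rather than collected into a list.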

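Step 3: Wrap everything in a try-catch so the multipart upload is aborted if anything goes wrong. Otherwise, the parts uploaded so far remain stored in the bucket (and are billed) until the upload is either completed or aborted.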

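```csharp
string? uploadId = null; // declared outside the try so the catch block can see it

try
{
    // Step 1: initiate the upload and assign uploadId = initResponse.UploadId
    // Step 2: upload the parts and complete the upload
}
catch (Exception)
{
    if (!string.IsNullOrEmpty(uploadId))
    {
        // Discard the parts uploaded so far
        var abortRequest = new AbortMultipartUploadRequest
        {
            BucketName = _configuration.BucketName,
            Key = storeId.ToString(),
            UploadId = uploadId
        };

        await _client.AbortMultipartUploadAsync(abortRequest);
    }

    throw; // rethrow or handle as needed
}
```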
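One consequence of the fixed 5 MB part size in Step 2 is worth keeping in mind: S3 allows at most 10,000 parts per multipart upload, so the part size caps the largest object you can stream this way. The arithmetic is sketched below; MaxObjectSize and MinPartSizeFor are hypothetical helpers, not SDK methods.

```csharp
using System;

const int MaxParts = 10_000;               // S3's limit on parts per multipart upload
const long MinPartSize = 5L * 1024 * 1024; // the "5 MB" minimum (5 MiB) for every part except the last

// Largest object that fits into MaxParts parts of the given size.
long MaxObjectSize(long partSize) => partSize * MaxParts;

// Smallest part size (never below the S3 minimum) that fits an object of the given size.
long MinPartSizeFor(long expectedMaxBytes) =>
    Math.Max(MinPartSize, (expectedMaxBytes + MaxParts - 1) / MaxParts); // ceiling division

Console.WriteLine(MaxObjectSize(MinPartSize));  // 52428800000 (about 48.8 GiB)
Console.WriteLine(MinPartSizeFor(1L << 30));    // 5242880 (1 GiB fits with the minimum)
Console.WriteLine(MinPartSizeFor(100L << 30));  // 10737419 (about 10.24 MiB for 100 GiB)
```

Since the stream's length is unknown, the part size has to be chosen from an expected upper bound; a larger part size simply costs a larger buffer per concurrent upload.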